Pi-hole: 7 years later

In 2017, I installed a Pi-hole on my network and routed all my DNS traffic through it. Today is February 23, 2025, and I've been running it ever since. This will be the 9th post under the pi-hole tag.

This was the year that the elusive rewrite, Pi-hole v6, was finally released, and boy, what a letdown the Hacker News post was. The post generally got ripped apart over features Pi-hole didn't have, and links to alternatives were posted instead. It always bugs me a bit when a Hacker News thread is about a new version of software and most of the conversation in that thread is not about that software.

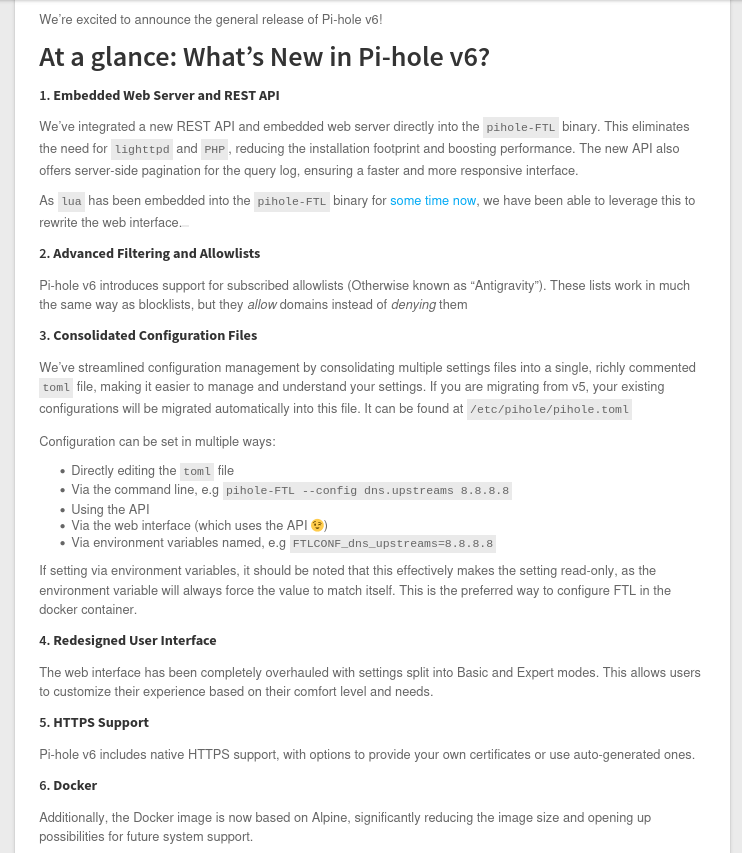

Regardless, I clicked the link and read the post below.

Such a weird thing to be upset about, but this was worked on for 4-5 years and we get 6 bullet points and that's it? I was expecting some photos, before/after comparisons, performance differences and more. Granted, this is free software and I'm being ridiculous, but wouldn't you want to show off your new major creation?

Breaking these features down, and ignoring the updated Docker image, you get:

- Bye bye PHP & lighttpd - everything is embedded in FTL

- Allow-list support

- Unified configuration file

- Redesigned Interface

- HTTPS support

Embedding a web server seems like an interesting choice, so I wondered when that started. The first commit was way back in May 2020, when DL6ER moved to replace the PHP-CGI interpreter with PH7.

> PH7 is a in-process software C library which implements a highly-efficient embeddable bytecode compiler and a virtual machine for the PHP programming language. In other words, PH7 is a PHP engine which allow the host application to compile and execute PHP scripts in-process. PH7 is to PHP what SQLite is to SQL.

I guess for a tinkerer that seems like an interesting task, but boy, I don't envy the work now on their plate managing their own web server within FTL. Of course, we now know that choice changed again later in development, to CivetWeb. So let's boot this up, take the upgrade, and see what is different. For a reminder of what it looked like previously, please check out the Year 6 post.

So I carefully executed `pihole -up` and waited.

```text
[i] Checking for updates...
[i] Pi-hole Core: update available
[i] Web Interface: update available
[i] FTL: update available

Wrote config file:
 - 152 total entries
 - 132 entries are default
 - 20 entries are modified
 - 0 entries are forced through environment
Config file written to /etc/pihole/pihole.toml

[i] Upgrading gravity database from version 15 to 16
[i] Upgrading gravity database from version 16 to 17
[i] Upgrading gravity database from version 17 to 18
[i] Upgrading gravity database from version 18 to 19
[i] Restarting services...
[✓] Enabling pihole-FTL service to start on reboot...
[✓] Restarting pihole-FTL service...
[✓] Migrating the list's cache directory to new location
[✓] Deleting existing list cache
[✗] DNS resolution is currently unavailable
[✓] DNS resolution is available
[✓] Done.
[i] The install log is located at: /etc/pihole/install.log
[✓] Update complete!

Core version is v6.0 (Latest: v6.0)
Web version is v6.0 (Latest: v6.0)
FTL version is v6.0 (Latest: v6.0)
```

Kudos have to be given for such an easy upgrade path, as that is not easy to pull off. I ran one command, walked away, and it was done when I returned.
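The upgrade log shows the gravity database being migrated one version at a time (15 to 16, 16 to 17, and so on) rather than jumping straight to 19. Here is a minimal Python sketch of that stepwise pattern, assuming SQLite's `PRAGMA user_version` as the version counter. The `MIGRATIONS` table, the `migrate` function and the SQL inside are hypothetical; this is not Pi-hole's actual migration code or gravity schema.

```python
import sqlite3

# Hypothetical migration steps: each key is the version a step upgrades *from*.
# The SQL is illustrative only and is NOT Pi-hole's gravity schema.
MIGRATIONS = {
    15: "CREATE TABLE IF NOT EXISTS example_a (id INTEGER PRIMARY KEY);",
    16: "ALTER TABLE example_a ADD COLUMN comment TEXT;",
    17: "CREATE TABLE IF NOT EXISTS example_b (id INTEGER PRIMARY KEY);",
    18: "CREATE INDEX IF NOT EXISTS idx_example_b ON example_b (id);",
}


def migrate(conn: sqlite3.Connection, target: int) -> list[str]:
    """Apply migrations one version at a time until the schema reaches target."""
    log = []
    version = conn.execute("PRAGMA user_version").fetchone()[0]
    while version < target:
        conn.executescript(MIGRATIONS[version])
        # PRAGMA statements cannot take bound parameters, so the int is formatted in.
        conn.execute(f"PRAGMA user_version = {version + 1}")
        log.append(f"Upgrading database from version {version} to {version + 1}")
        version += 1
    conn.commit()
    return log


if __name__ == "__main__":
    db = sqlite3.connect(":memory:")
    db.execute("PRAGMA user_version = 15")  # pretend we start at version 15
    for line in migrate(db, 19):
        print(line)
```

Running every step in order means a database several versions behind (like mine) follows the same path as one that is only a single version behind.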

However, the web server did not start, so like any sane person I upgraded my OS packages to their latest patch versions, rebooted, and ran the debug command `pihole -d`.

One line stood out as the likely problem.

```text
*** [ DIAGNOSING ]: Ports in use
[✓] udp:0.0.0.0:53 is in use by pihole-FTL
[✗] tcp:0.0.0.0:80 is in use by apache2 (https://docs.pi-hole.net/main/prerequisites/#ports)
[✓] tcp:0.0.0.0:53 is in use by pihole-FTL
```

I didn't even know I had Apache on this Pi, so I quickly uninstalled it and restarted Pi-hole. Now "https://pi.hole" loaded successfully.
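If you hit the same port conflict, a quick way to confirm something is already listening before (re)starting Pi-hole is a plain TCP connect test. This is a minimal Python sketch; the `port_in_use` helper is hypothetical, it only checks 127.0.0.1 by default, and unlike `pihole -d` it cannot tell you which process owns the port.

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1", timeout: float = 0.5) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success instead of raising an exception.
        return sock.connect_ex((host, port)) == 0


if __name__ == "__main__":
    # Ports Pi-hole v6 cares about: DNS, HTTP and HTTPS.
    for port in (53, 80, 443):
        state = "in use" if port_in_use(port) else "free"
        print(f"tcp:{port} is {state}")
```

A listener bound to 0.0.0.0 (like Apache was here) also answers on 127.0.0.1, which is why checking loopback is usually enough on the Pi itself.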

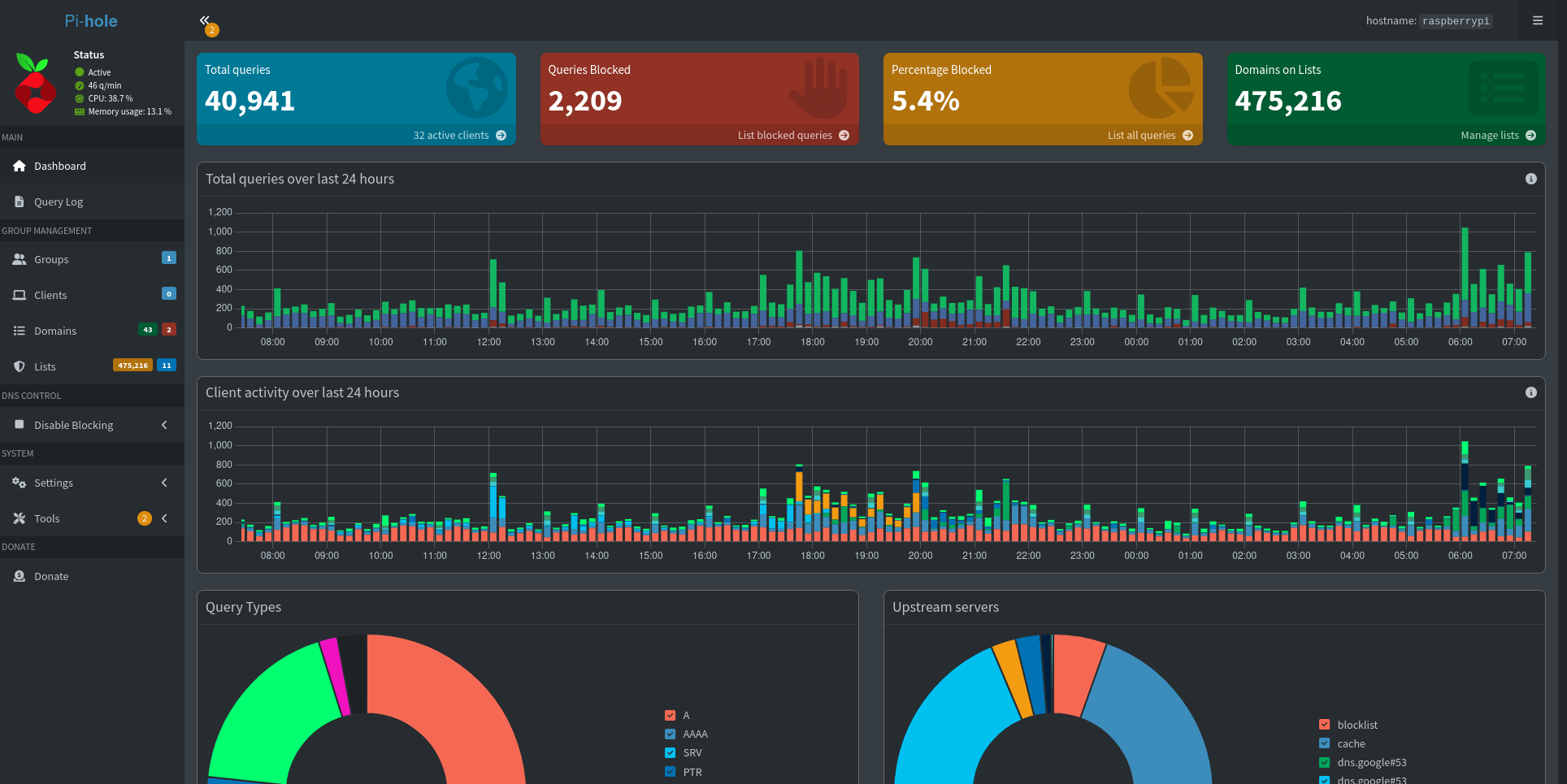

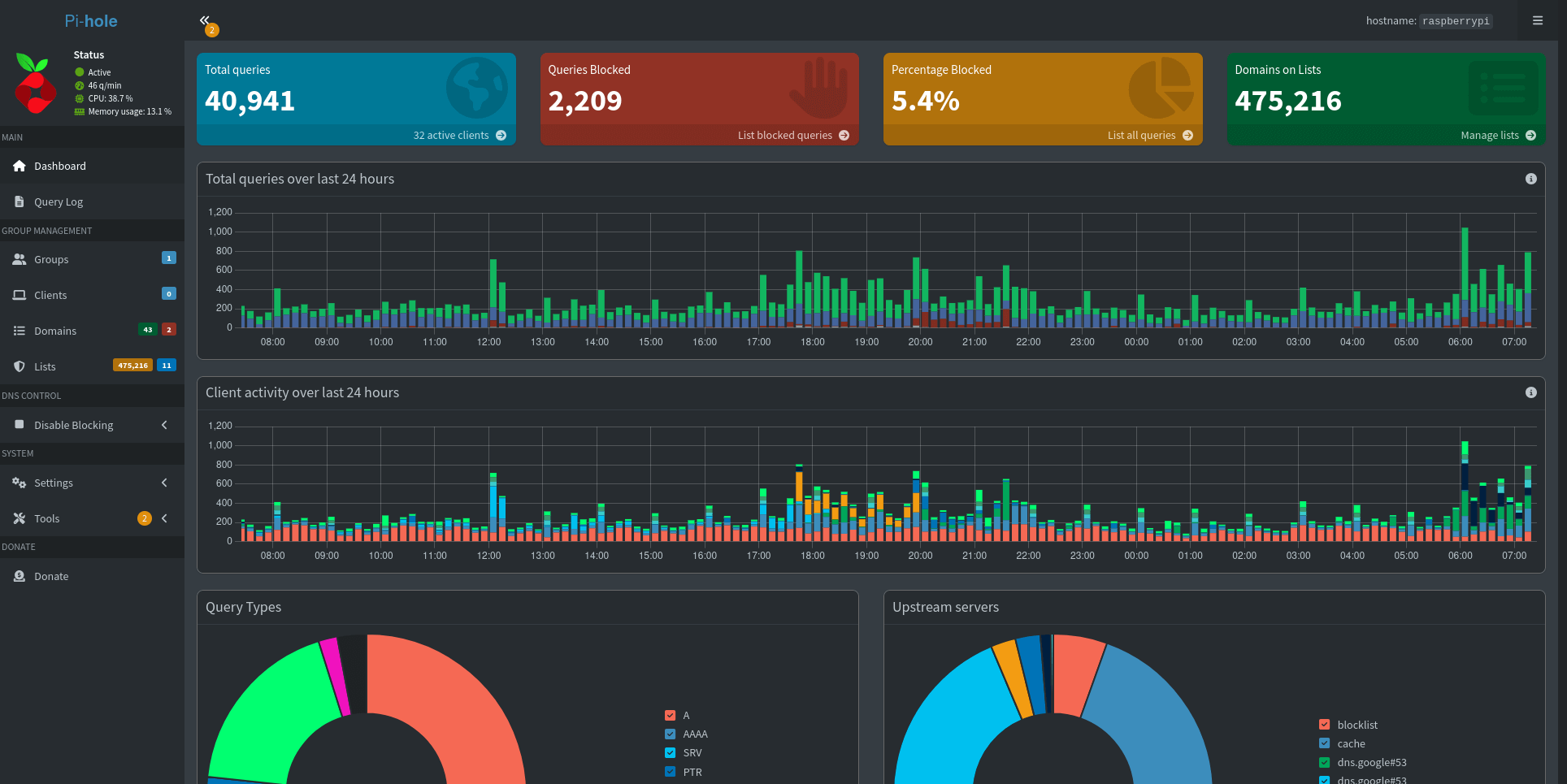

The settings area was definitely overhauled, but the main interface seemed generally unchanged. I spent a few days clicking around and exploring this upgrade, and it seems the goal was to expose as much nerdy information to the end user as possible.

In this video, the default experience is "Basic" mode, but you can opt into a nerdier breakdown of stats and options by switching to "Expert".

Toggling "System Settings" between Basic/Expert

Outside of that, I didn't have much to do: my blocking was still working and I had no reason to configure anything else. So I got to work exporting my usual, ever-growing stats and checking on how PADD is doing.

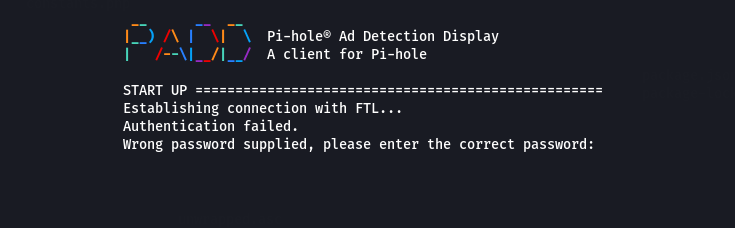

It seems PADD just wasn't ready for the new major version yet, as it failed to launch. I tried the bleeding-edge "development" version, but it failed with a different error.

My password worked to log into the web interface but failed here, so I wasn't sure what I was doing wrong. There is a downside to upgrading on the day a new major version of software is released, and in this case it was that not all of the official extra components were ready to roll. I'm sure it'll be resolved by the time I write next year's post; PADD just missed my blog deadline (February 23, 2025) for working on Pi-hole v6.

Next, it was time to check on my internal "pi-stats" project, which gets slower and slower as time goes on. This year, though, I moved the project to a new server, which made processing quite a bit faster.

```text
Total Queries: 72,898,780
First Query: 2019-06-17 01:55:35
Last Query: 2025-02-19 11:44:59
Time in days: 2074
Time taken: 54 minute(s)
```

Output of `php artisan stats:dump` (internal Laravel project)
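I don't know exactly how the internal Laravel project computes "Time in days", but assuming it is the number of whole days elapsed between the first and last query, a few lines of Python reproduce it from the dump and add a queries-per-day average (a figure the dump itself doesn't report).

```python
from datetime import datetime

# Timestamps and total copied from the stats dump.
first_query = datetime(2019, 6, 17, 1, 55, 35)
last_query = datetime(2025, 2, 19, 11, 44, 59)
total_queries = 72_898_780

# timedelta.days counts whole elapsed days and drops the leftover hours.
days = (last_query - first_query).days
per_day = total_queries / days

print(f"Time in days: {days}")
print(f"Average queries per day: {per_day:,.0f}")
```

With these inputs `days` comes out to 2074, matching the dump, and the average works out to roughly 35,149 queries per day.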

This time, with nearly 6 years of data and 72 million queries, I can run some analytics on the top 15 allowed and blocked domains.
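A top-N list like the two tables below boils down to a `GROUP BY` over the query log. This is a minimal Python/SQLite sketch that assumes a hypothetical `queries(domain, blocked)` table with made-up sample rows; Pi-hole's real long-term database has its own schema and status codes, and this is not how pi-stats is actually implemented.

```python
import sqlite3

# Hypothetical schema: one row per DNS query with a 0/1 "blocked" flag.
# Pi-hole's real long-term database uses its own tables and status codes.
SAMPLE_QUERIES = [
    ("pool.ntp.org", 0),
    ("pool.ntp.org", 0),
    ("www.gstatic.com", 0),
    ("ssl.google-analytics.com", 1),
    ("ssl.google-analytics.com", 1),
    ("app-measurement.com", 1),
]


def top_domains(conn: sqlite3.Connection, blocked: bool, limit: int = 15):
    """Return (domain, count) pairs, most-queried first."""
    return conn.execute(
        """
        SELECT domain, COUNT(*) AS hits
        FROM queries
        WHERE blocked = ?
        GROUP BY domain
        ORDER BY hits DESC, domain ASC
        LIMIT ?
        """,
        (int(blocked), limit),
    ).fetchall()


if __name__ == "__main__":
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE queries (domain TEXT NOT NULL, blocked INTEGER NOT NULL)")
    db.executemany("INSERT INTO queries VALUES (?, ?)", SAMPLE_QUERIES)
    print(top_domains(db, blocked=False))
    print(top_domains(db, blocked=True))
```

Sorting by domain name as a tie-breaker keeps the output stable when two domains have the same count.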

Top 15 Allowed Domains

| Domain | Count |
|---|---|
| e7bf16b0-65ae-2f4e-0a6a-bcbe7b543c73.local | 5,631,937 |
| 68c40e5d-4310-def5-a1c3-20640e1cd583.local | 5,305,150 |
| 1d95ffae-4388-9fbc-1646-b2b637cecb64.local | 4,898,205 |
| localhost | 4,461,024 |
| 1.1.1.1.in-addr.arpa | 2,019,616 |
| www.gstatic.com | 1,443,414 |
| ping2.ui.com | 1,422,801 |
| ping.ui.com | 1,389,554 |
| 806c4c48-1715-4220-054f-909f83563938.local | 1,342,386 |
| 8.8.8.8.in-addr.arpa | 1,186,950 |
| api-0.core.keybaseapi.com | 1,184,543 |
| b.canaryis.com | 1,125,173 |
| 168.192.in-addr.arpa | 1,057,459 |
| pistats.ibotpeaches.com | 1,050,674 |
| pool.ntp.org | 762,666 |

.local domain. Why they wouldn't stop after the first 100,000 requests if the other device(s) didn't respond properly, I don't know.

If we compare back to last year, the biggest change is an NTP server crawling into the top 15 domains. For those unaware, NTP servers simply keep track of time so your machines can always double-check that their clocks are correct. Maybe the number of devices on my network (32) has simply increased the amount of NTP requests?

I'm not sure, but I can already see I need to improve this script to detect the highest client count per domain. Otherwise, nothing else stands out as requiring investigation.
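One possible reading of "highest client count per domain" is the number of distinct clients that queried each domain, which would help answer the NTP question (many devices each asking a little, versus one device asking a lot). Here is a minimal Python sketch, assuming the query log can be reduced to `(domain, client)` pairs; the `clients_per_domain` helper and the sample client addresses are made up for illustration.

```python
from collections import defaultdict

# Hypothetical (domain, client) pairs; in practice these would come from the
# query log. The client addresses are made up for illustration.
QUERIES = [
    ("pool.ntp.org", "192.168.1.20"),
    ("pool.ntp.org", "192.168.1.21"),
    ("pool.ntp.org", "192.168.1.20"),
    ("ping.ui.com", "192.168.1.2"),
    ("ping.ui.com", "192.168.1.2"),
]


def clients_per_domain(queries):
    """Map each domain to the number of distinct clients that queried it."""
    seen = defaultdict(set)
    for domain, client in queries:
        seen[domain].add(client)
    # Highest distinct-client count first; ties broken by domain name.
    return sorted(
        ((domain, len(clients)) for domain, clients in seen.items()),
        key=lambda item: (-item[1], item[0]),
    )


if __name__ == "__main__":
    for domain, count in clients_per_domain(QUERIES):
        print(f"{domain}: {count} client(s)")
```

If `pool.ntp.org` showed up with most of the network's clients behind it, the "more devices, more NTP lookups" theory would hold; a single noisy client would point somewhere else.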

I do know my Pi-Stats domain is now served from a Raspberry Pi on my local network, so that domain should fall out of the top domains, since those requests now go straight to 192.168.1.6 instead.

Top 15 Blocked Domains

| Domain | Count |
|---|---|
| 806c4c48-1715-4220-054f-909f83563938.local | 803,900 |
| e7bf16b0-65ae-2f4e-0a6a-bcbe7b543c73.local | 638,460 |
| ssl.google-analytics.com | 508,524 |
| 1d95ffae-4388-9fbc-1646-b2b637cecb64.local | 432,008 |
| app-measurement.com | 391,037 |
| 68c40e5d-4310-def5-a1c3-20640e1cd583.local | 247,892 |
| watson.telemetry.microsoft.com | 217,743 |
| logs.netflix.com | 187,798 |
| googleads.g.doubleclick.net | 158,311 |
| androidtvchannels-pa.googleapis.com | 153,954 |
| mobile.pipe.aria.microsoft.com | 124,971 |
| flask.us.nextdoor.com | 113,808 |
| beacons.gcp.gvt2.com | 101,264 |
| sb.scorecardresearch.com | 99,971 |
| beacons.gvt2.com | 97,699 |

Reviewing these domains, a few things stand out:

- (flask.us.nextdoor.com) I only have Nextdoor installed on my Android phone, and it makes way too many analytics requests. I have removed it; nothing in that application is helpful when 90% of the posts are just advertisements.

- (androidtvchannels-pa.googleapis.com) Blocking advertisements on my NVIDIA SHIELD has resulted in me getting the same advertisement for a year-old NFL playoff game of Chiefs vs Dolphins. I guess that advertisement was cached, so it keeps playing the only thing it can obtain.

- (watson.telemetry.microsoft.com) Windows 11 continues to invade my privacy, and every single update requires double-checking my settings to ensure my information is not being legally exfiltrated.

- (ssl.google-analytics.com) Firebase/Google tracking and advertising reigns supreme, with the most blocks across the board.

Overall, I'm still very happy to have this software on my network, and via WireGuard I have my Pi-hole DNS no matter where my phone is in the world. Every year, though, products and systems get more clever, routing their advertisements through the same domains their content arrives from.

At that point, a purely DNS-based blocking solution does not help, which has instead led to the rise of programs like ReVanced.
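To make the limitation concrete: a DNS-based blocker like Pi-hole only ever sees the hostname being looked up, never the path of the request. This toy Python sketch uses hypothetical hostnames under the reserved example.com/example.net domains and a made-up `dns_blocks` helper; once an ad is served from the same host as the content, there is no hostname left to block without also blocking the content.

```python
from urllib.parse import urlsplit

# Hypothetical blocklist; hostnames use reserved example domains.
BLOCKLIST = {"ads.example.net"}


def dns_blocks(url: str) -> bool:
    """Decide like a DNS blocker would: by hostname only, ignoring the path."""
    return urlsplit(url).hostname in BLOCKLIST


if __name__ == "__main__":
    video = "https://media.example.com/watch/episode-1.mp4"
    ad = "https://media.example.com/ads/preroll.mp4"  # same host as the video
    tracker = "https://ads.example.net/pixel.gif"  # separate ad host
    for url in (video, ad, tracker):
        print(f"{'blocked' if dns_blocks(url) else 'allowed'}: {url}")
```

The separately hosted tracker is blocked, but the pre-roll ad gets the same verdict as the video it rides along with, which is exactly the gap app-level tools try to fill.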

Pi-hole continues to evolve and remains a great addition to my local network. As long as I use it, I'll try to do a yearly post in February.