Dead Internet Theory

Back in 2022 I wrote a blog post about how most of the traffic to one of my websites was bots (or otherwise non-human). As a short recap of that post: I picked a random day of analytics (May 16, 2022) and looked at the top 5 user agents in the traffic.

This broke down to:

- 18,004 hits - SemrushBot (Bot)

- 5,413 hits - AhrefsBot (Bot)

- 4,832 hits - Mobile iPhone (User)

- 4,020 hits - MJ12Bot (Bot)

- 3,343 hits - Firefox (User)
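As a quick sanity check on the arithmetic, here is a minimal Python sketch that sums the tally above. The `summarize` helper and the tuple layout are purely illustrative; only the hit counts and the Bot/User labels come from the list.

```python
# Top 5 user agents from May 16, 2022, as listed above: (hits, name, kind).
TOP_USER_AGENTS = [
    (18_004, "SemrushBot", "bot"),
    (5_413, "AhrefsBot", "bot"),
    (4_832, "Mobile iPhone", "user"),
    (4_020, "MJ12Bot", "bot"),
    (3_343, "Firefox", "user"),
]


def summarize(rows):
    """Return (bot_hits, human_hits, bot_share) for rows of (hits, name, kind)."""
    bot_hits = sum(hits for hits, _, kind in rows if kind == "bot")
    human_hits = sum(hits for hits, _, kind in rows if kind == "user")
    # Share is relative to these rows only, not to all traffic that day.
    return bot_hits, human_hits, bot_hits / (bot_hits + human_hits)


bot_hits, human_hits, bot_share = summarize(TOP_USER_AGENTS)
print(f"{bot_hits:,} bot / {human_hits:,} human / {bot_share:.0%} non-human")
# prints: 27,437 bot / 8,175 human / 77% non-human
```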

That works out to 27,437 bot requests and 8,175 human requests, or put more briefly, 77% of the traffic from those top 5 user agents that day was non-human. You can see the same sort of bot domination when you look at private message requests or spam emails. Someone tweeted at me to say some form of "check your DMs", which I thought was odd since I didn't have a notification for a direct message.

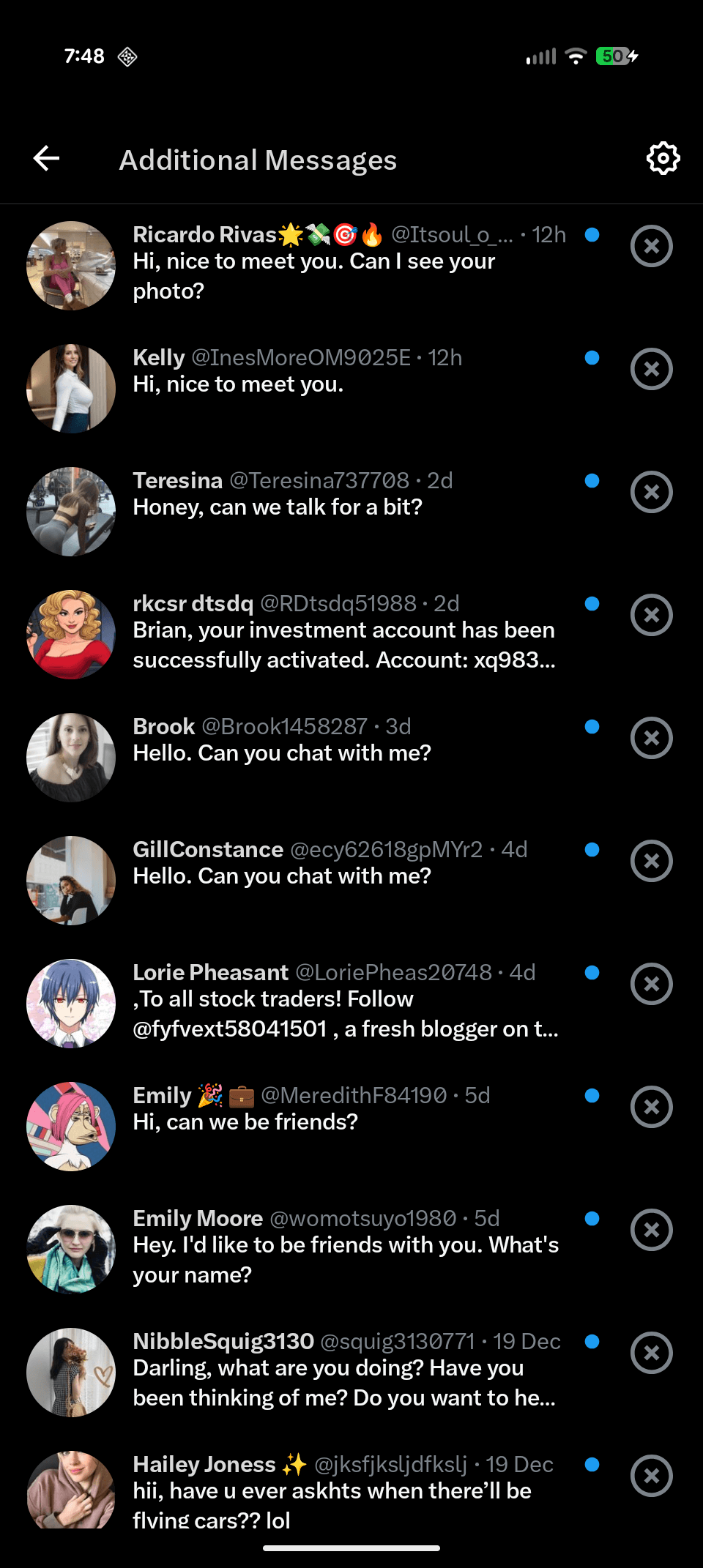

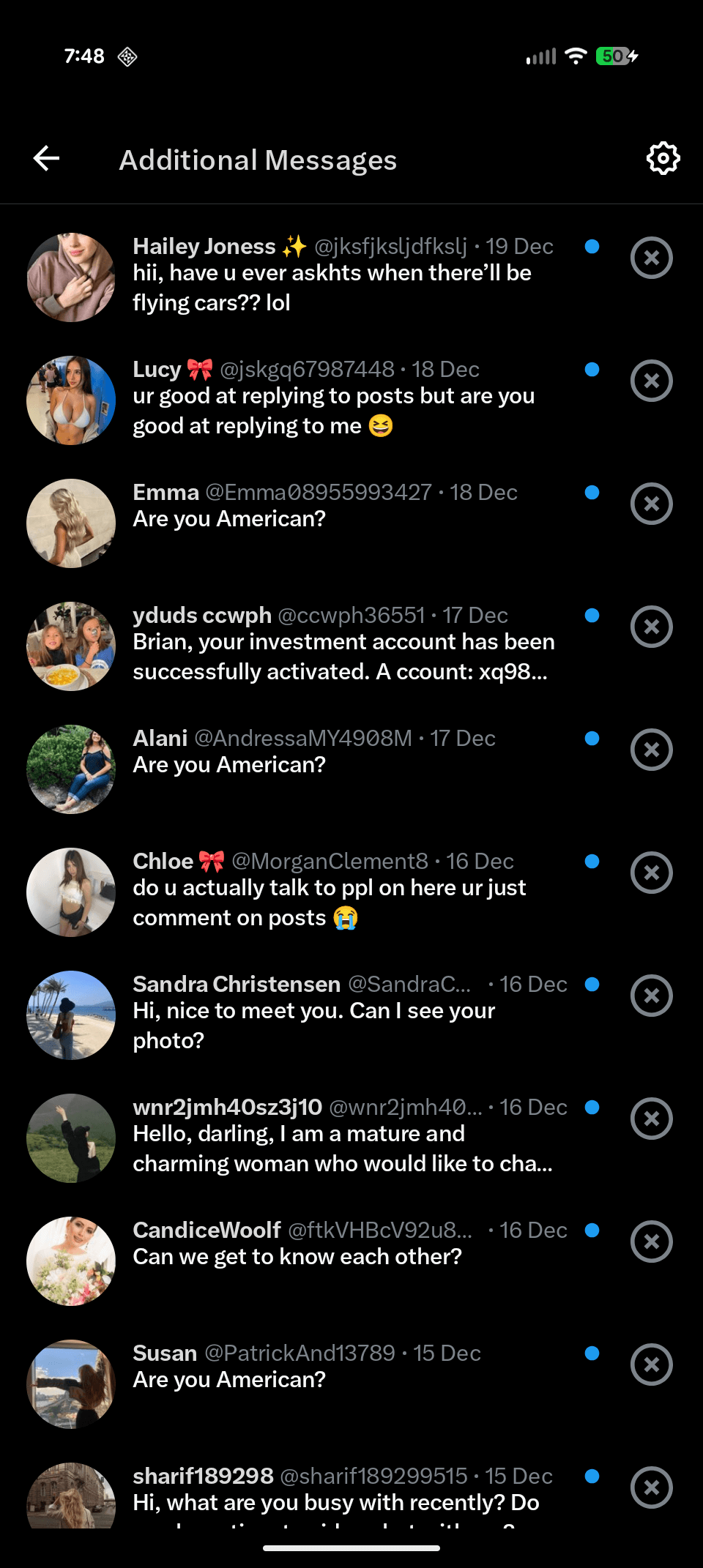

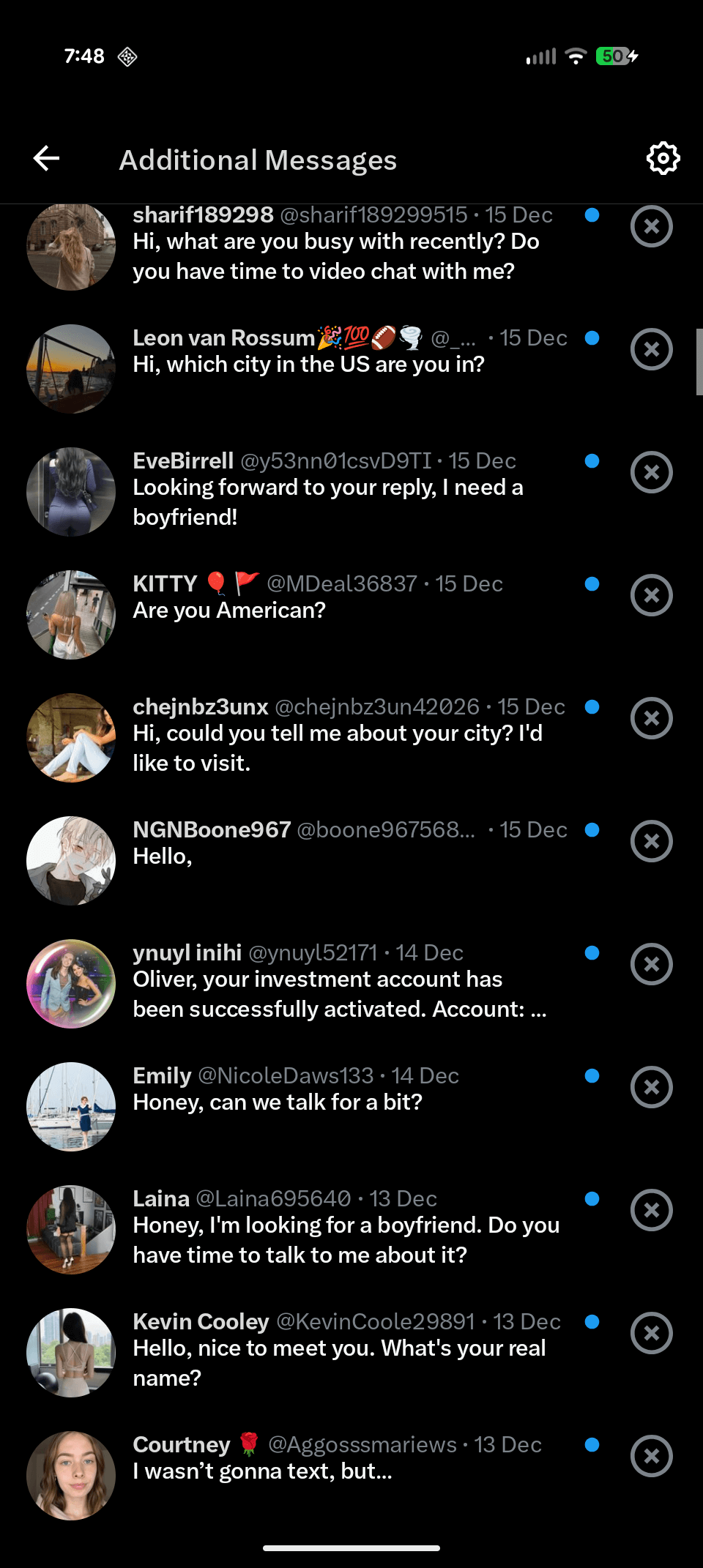

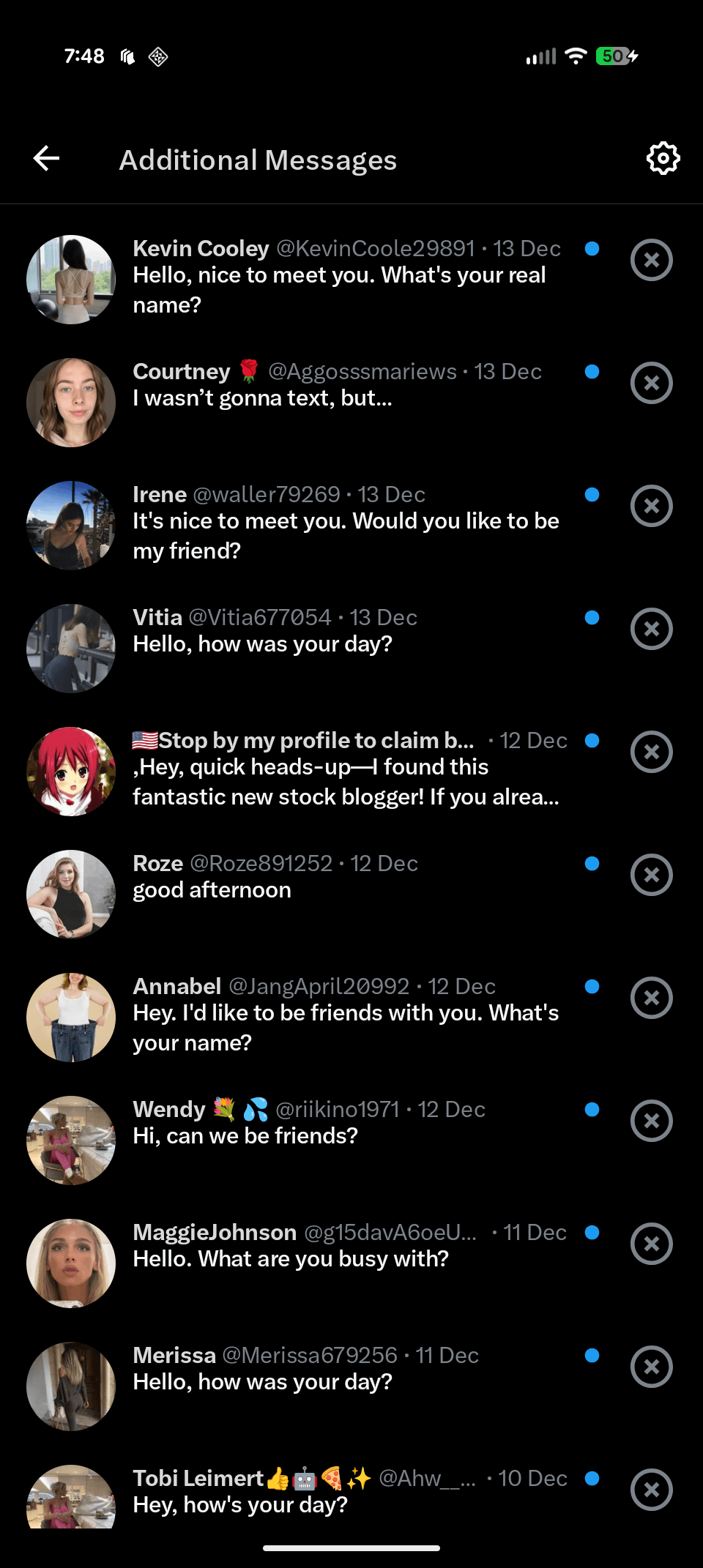

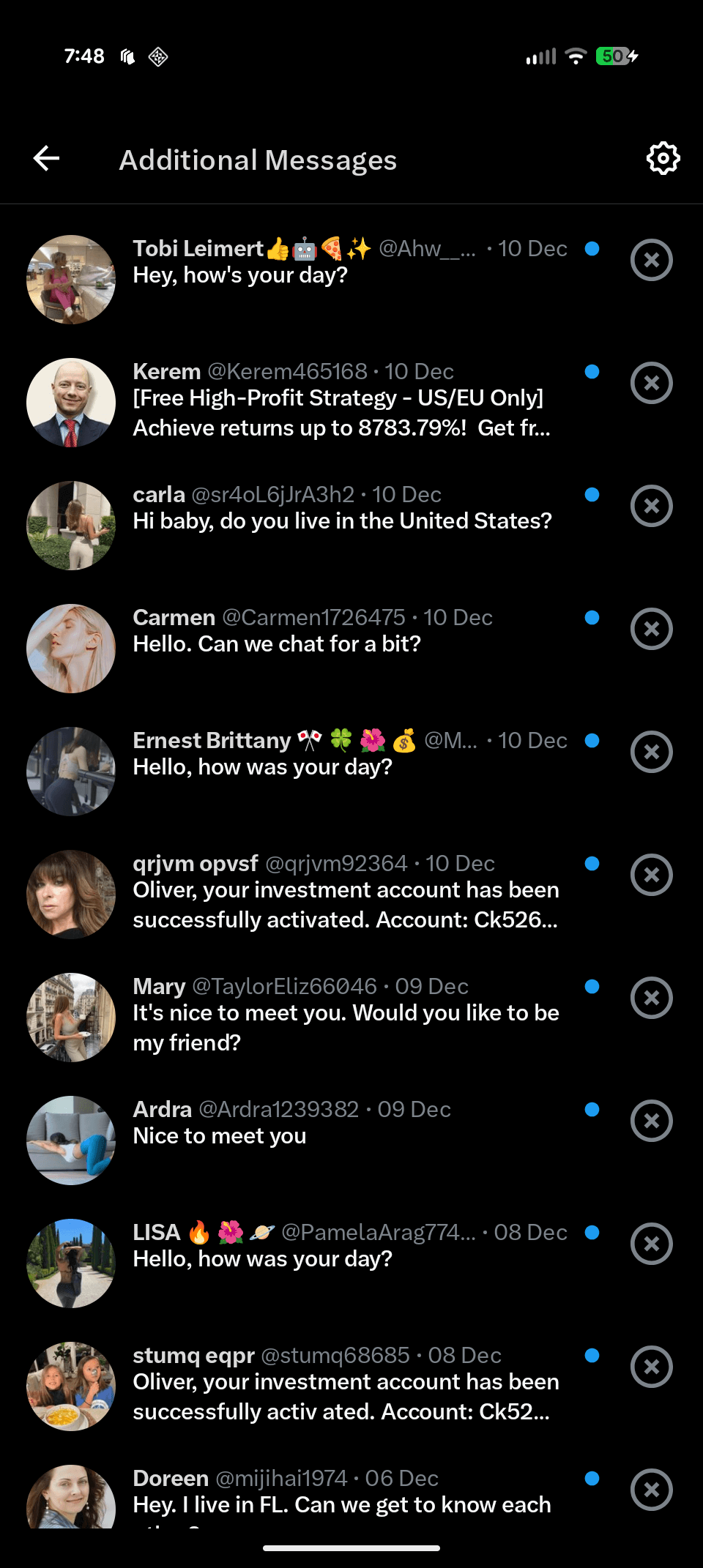

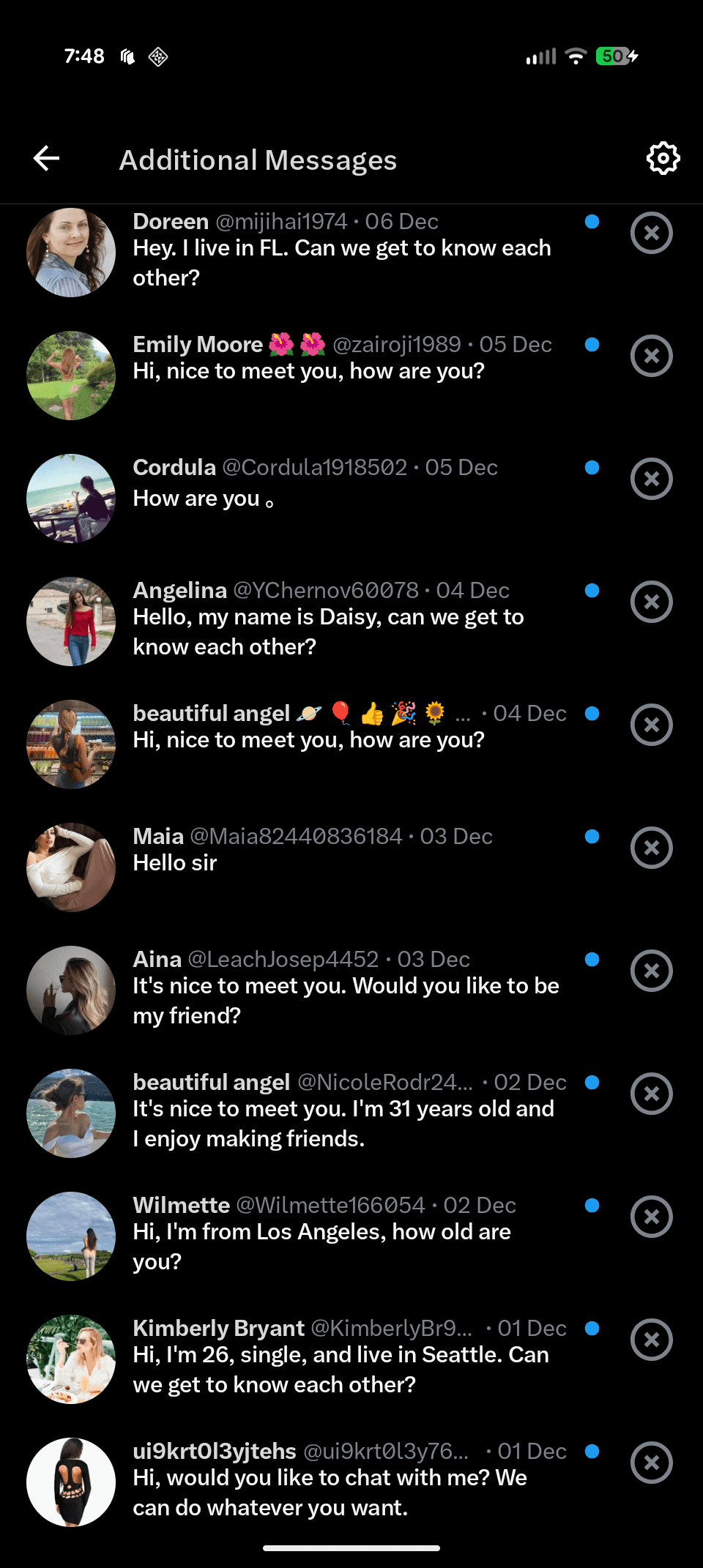

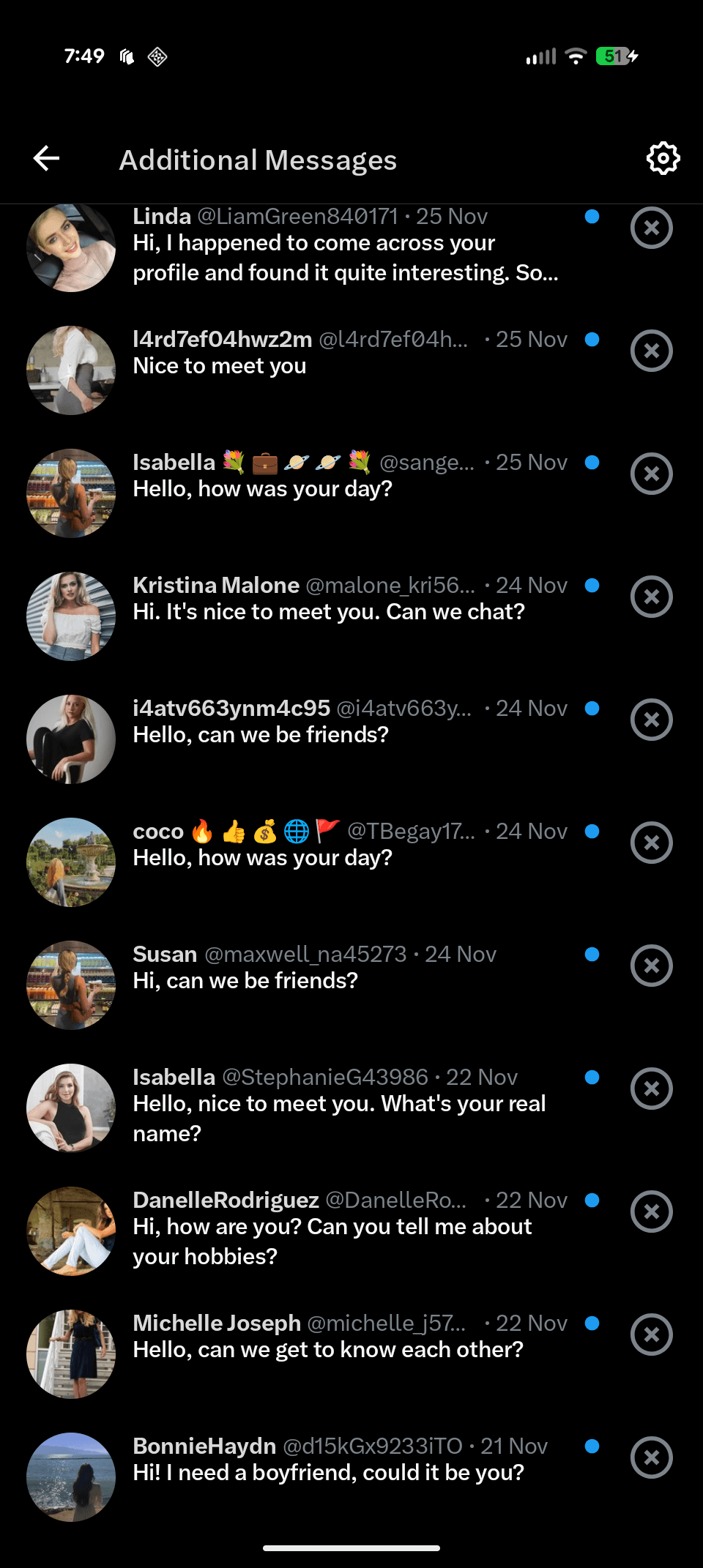

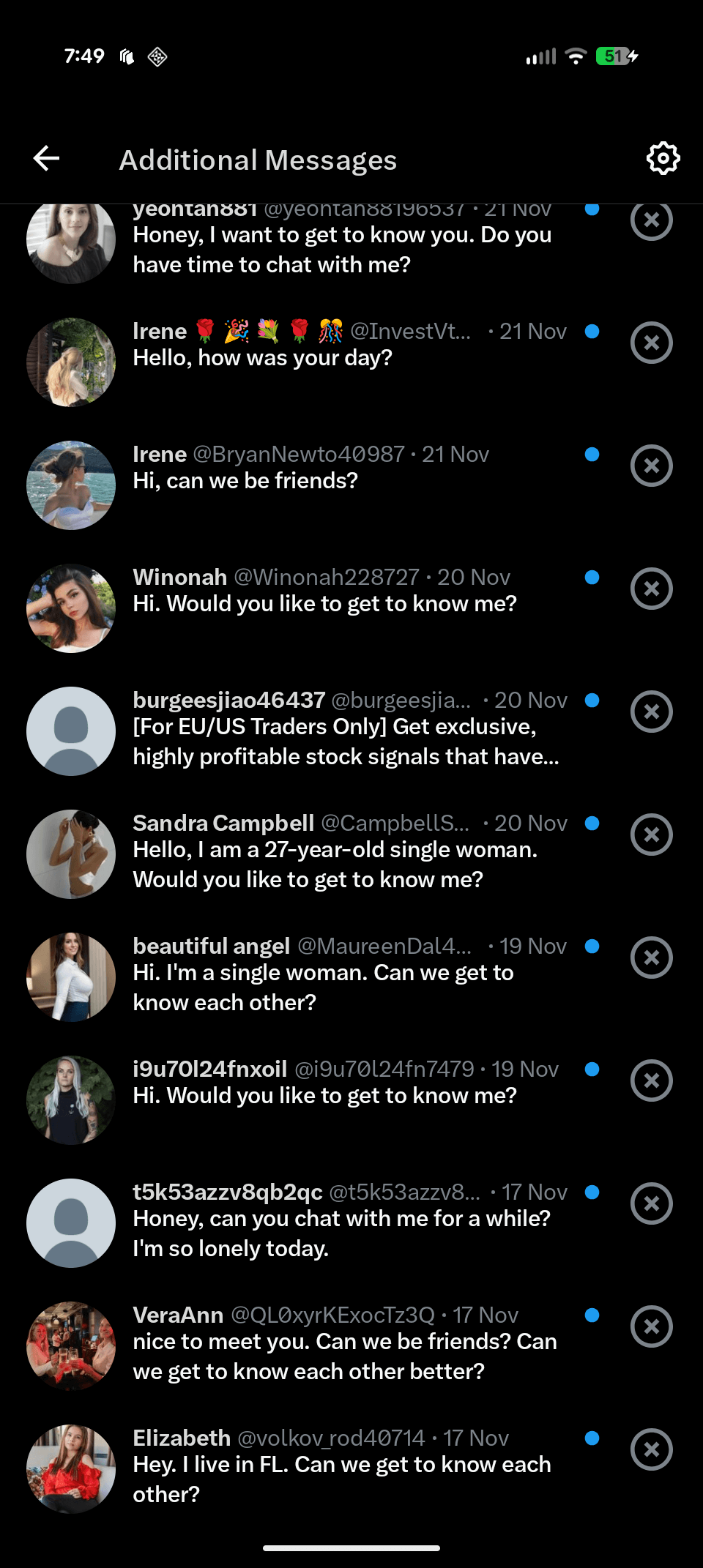

Turns out the message had been filtered out of my notifications for looking a bit like spam. This specific message looked real, but it was clearly a spam attempt to sell me SEO help I didn't need. Since I was hunting for one specific message, I could see that my filtered list of private message requests was insanely large, even just looking at roughly the past 2 months.

Twitter (X) direct message spam from November 17 to December 26, 2025

I don't remember this amount of spam prior to the acquisition and the rename to X. Since banned accounts are removed from my list, I have to assume the ~100 accounts that spammed me over the last 2 months are still unbanned. I'm not alone here, as these accounts probably spammed plenty of people besides me, but it's absolutely ridiculous.

Real messages on Twitter/X are a thing of the past. I make a post and within moments I get a few pings:

- "Тhе ВIGGЕST #Сryрtо #РUМР #Signаl is hеrе!"

- "Can you please check your dm?"

- "This account _____ helped me get my account back."

It's interesting that tweeting about a domain issue can trigger all these automated, non-human replies.

I don't even want to talk about email, because I just rely on Google to filter the never-ending spam out of my inbox. Looking at my spam folder for the last 30 days, I have 55 emails automatically placed there. Thankfully this is a problem that has pretty much been solved for end users of Gmail, but it still exists in the sense that any email provider needs a robust system for thwarting spam.

What's more annoying are forums, support boards, and communities. For example, on GitHub I've been noticing a new pattern where a new user will post an obviously AI-generated comment or question on an issue. A few weeks or months go by and that post gets edited to include a link to some service - basically spam. It's a clever technique: plant a semi-relevant bogus comment, then later convert it into a spam post.

Thankfully I started noticing the obvious pattern in these usernames and just started deleting the posts immediately. However, I was freed from doing even that when I realized GitHub has a newer feature that lets me block new users from interacting with my repository.

GitHub has made leaps and bounds of improvement in this area, so repositories targeted for any reason can put some interaction cool-downs in place. The limits aren't permanent and lift after some time, but they help push these bots onto a different repository. There are currently 3 settings for this purpose, listed below:

- Users that have recently created their account will be unable to interact with the repository.

- Users that have not previously committed to the main branch of this repository will be unable to interact with the repository.

- Users that are not collaborators will not be able to interact with the repository.
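For anyone who would rather script this than click through the settings page, here is a rough sketch, assuming GitHub's REST API endpoint for repository interaction limits (`PUT /repos/{owner}/{repo}/interaction-limits`). The pairing of the three settings above with the API's `limit` values is an assumption worth checking against the current GitHub docs, and `build_limit_request`, the setting keys, and the owner, repo, and token values are all hypothetical placeholders. The request is only built here, not sent.

```python
import json
import urllib.request

# Assumed pairing of the three settings above with the API's `limit` values.
LIMITS = {
    "new_accounts": "existing_users",          # recently created accounts blocked
    "non_contributors": "contributors_only",   # no prior commits to main: blocked
    "non_collaborators": "collaborators_only", # everyone but collaborators blocked
}
# Durations the API accepts for `expiry`; the limit lifts afterwards.
EXPIRIES = {"one_day", "three_days", "one_week", "one_month", "six_months"}


def build_limit_request(owner, repo, setting, expiry, token):
    """Build (but do not send) the PUT request that sets an interaction limit."""
    if setting not in LIMITS:
        raise ValueError(f"unknown setting: {setting!r}")
    if expiry not in EXPIRIES:
        raise ValueError(f"unknown expiry: {expiry!r}")
    body = json.dumps({"limit": LIMITS[setting], "expiry": expiry}).encode("utf-8")
    return urllib.request.Request(
        f"https://api.github.com/repos/{owner}/{repo}/interaction-limits",
        data=body,
        method="PUT",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "X-GitHub-Api-Version": "2022-11-28",
        },
    )


# Placeholder values; nothing is sent over the network here.
req = build_limit_request(
    "example-owner", "example-repo", "new_accounts", "one_week", "YOUR_TOKEN"
)
print(req.get_method(), req.full_url)
```

Passing `req` to `urllib.request.urlopen` with a real token that has admin rights on the repository would actually apply the limit, so that step is deliberately left out.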

I was happy to accept the cost of deterring new GitHub users if it meant I wouldn't have to deal with spam anymore. I'm going under the assumption that any engineer already has a GitHub account, and that engineers who are new to GitHub probably aren't diving into reverse engineering Android APKs as their first order of business.

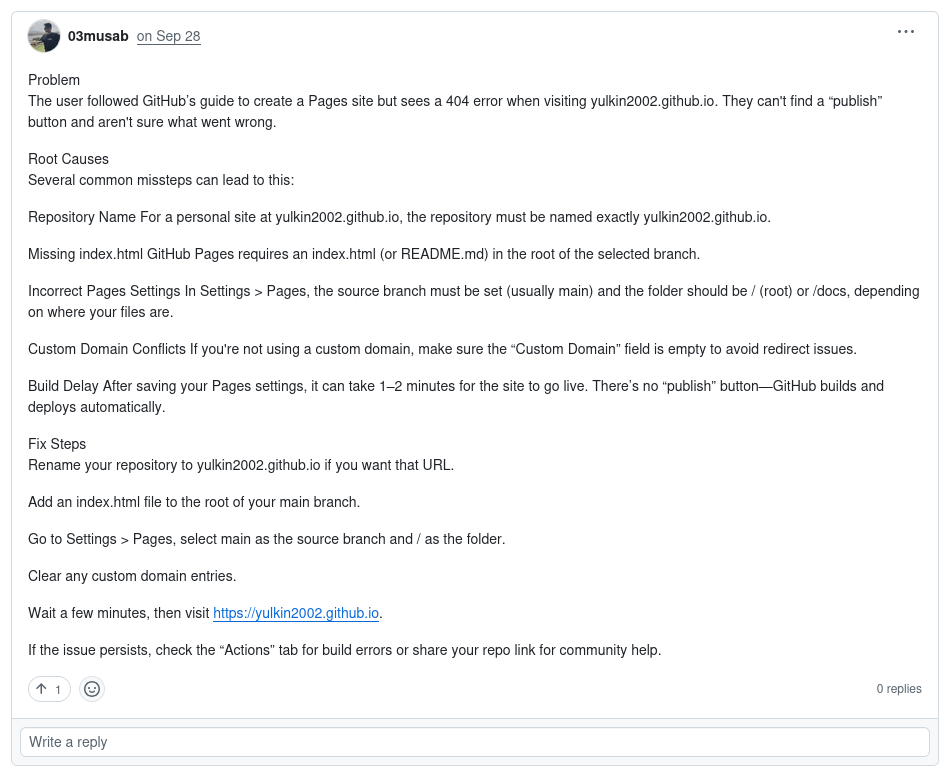

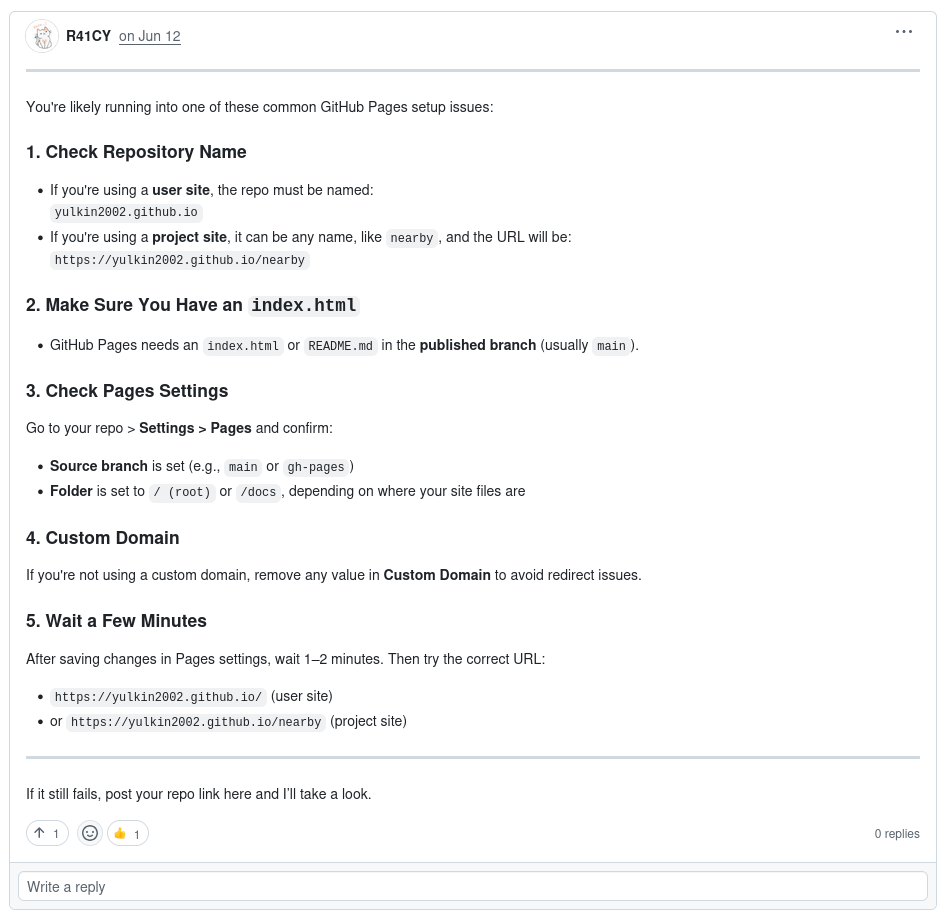

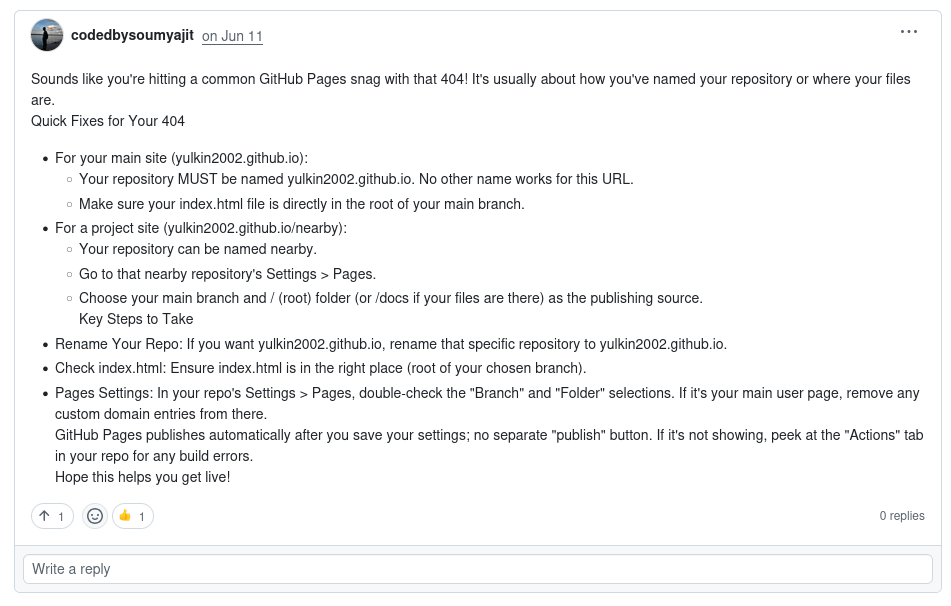

However, a different side of GitHub is full of messages that I pretty much classify as spam too, in this case the ever-increasing amount of purely LLM-generated comments. I was doing some Googling about a basic 404 on GitHub Pages with a custom domain and stumbled upon a thread describing the same error I had.

LLM-esque generated comments on a forum post. https://github.com/orgs/community/discussions/162497

As I scrolled through the comments, I was a bit annoyed to see several whose tone was clearly that of an LLM. One comment even included the problem statement along with the solution. I'm convinced these are not true robots, but rather humans trying to solve problems for end users with an LLM.

I'm torn here - if you've ever worked a support ticket system, you know a good deal of the tickets you get are basic questions that the reporter could have answered from the documentation. You spend so much time dealing with requests that shouldn't be requests that the real ones get backlogged in the mess.

So having what seems like an endless supply of users eager to solve every submitted problem with an LLM might be giving GitHub a bit of help. However, for those feeling the ache of non-stop LLM content, it could be quite the opposite of helpful.

Regardless of the service or platform, or whether I'm hosting the content or consuming it, it's starting to feel like a lot of dead content: less human-curated content, and more machines consuming and generating content to shove in my face.