2022 - Era of the CVE

About a decade ago, the term CVE (Common Vulnerabilities and Exposures) was associated with big-name software issues such as Heartbleed and Shellshock. What changed year after year was how easy it became to request a CVE for any type of software in the toolchain. The goal, of course, was to make more software secure and to encourage folks to patch such issues.

I want to start with CVE Details, a good site that breaks down how many CVEs it logs each year.

| Year | Count |
|---|---|
| 2015 | 6,504 |
| 2016 | 6,454 |
| 2017 | 14,714 |
| 2018 | 16,557 |
| 2019 | 17,344 |
| 2020 | 18,325 |
| 2021 | 20,142 |

It is quite interesting to me that upwards of 20,000 vulnerabilities in insecure software were documented and released in 2021. The real number is obviously far higher once you count everything that isn't documented, but I fear this volume is going to work against the population.

Let's start with an example: installing React Native on February 13, 2022, and seeing what happens when we reach the last step, `npm install`.
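To put the table above in perspective, here is a quick back-of-the-envelope sketch of the year-over-year change. It uses only the counts listed in the table; the variable and function names are made up for illustration and nothing here comes from CVE Details itself.

```python
# CVE counts per year, copied from the table above.
cve_counts = {
    2015: 6504,
    2016: 6454,
    2017: 14714,
    2018: 16557,
    2019: 17344,
    2020: 18325,
    2021: 20142,
}


def year_over_year(counts):
    """Return {year: percent change versus the previous year}."""
    years = sorted(counts)
    return {
        year: (counts[year] - counts[prev]) / counts[prev] * 100
        for prev, year in zip(years, years[1:])
    }


changes = year_over_year(cve_counts)
for year, pct in changes.items():
    print(f"{year}: {pct:+.1f}%")

# Overall growth from the first to the last year in the table.
overall = cve_counts[2021] / cve_counts[2015]
print(f"2015 -> 2021: {overall:.2f}x")
```

The big jump is 2017, at roughly +128% over 2016, and the 2021 count is about 3.1 times the 2015 count.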

➜ npm install

changed 4 packages, and audited 947 packages in 9s

79 packages are looking for funding

run `npm fund` for details

14 moderate severity vulnerabilities

To address issues that do not require attention, run:

npm audit fix

To address all issues (including breaking changes), run:

npm audit fix --force

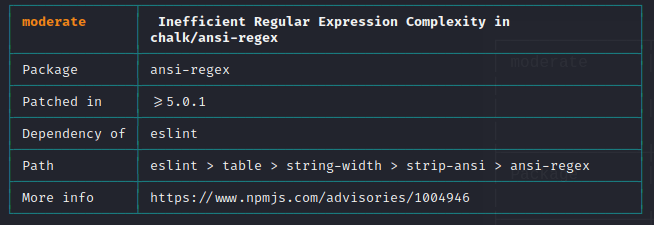

Run `npm audit` for details.

This is so scary! I have a brand new project that has 14 moderate severity vulnerabilities. So let's just take a look at the first one.

This is another perfect example of dependency hell in the Node world: some dependency five generations deep has an issue.

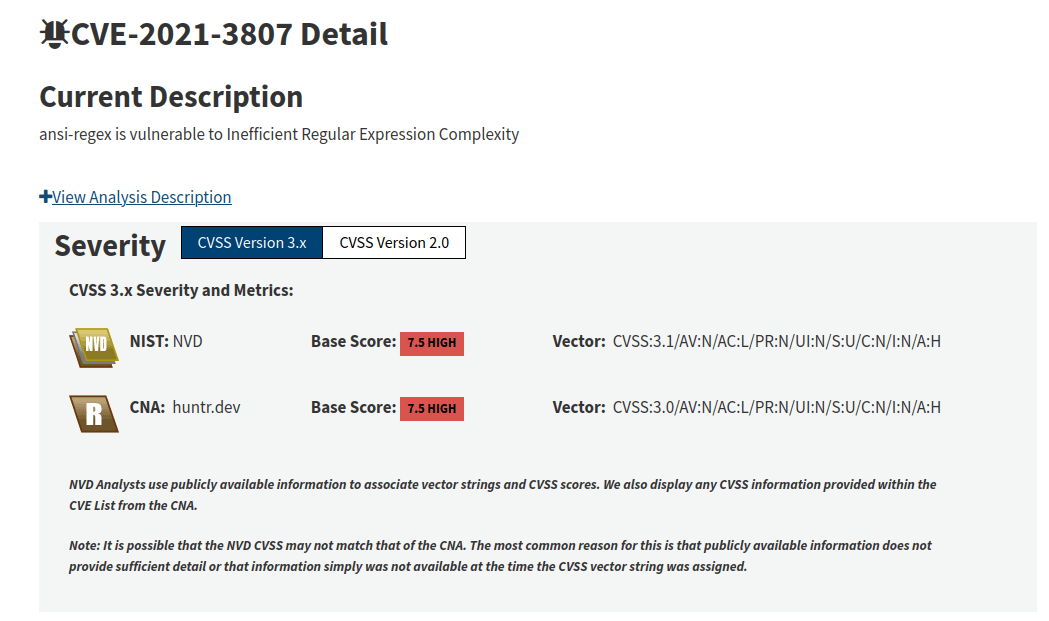

So what is the issue? We are talking about an inefficient regular expression. In short, intentionally malicious input can make the regular expression run nearly forever, exhausting resources and crashing a machine.
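To make that concrete, here is a minimal sketch of the general problem in Python. The pattern is a textbook example chosen for illustration; it is NOT the regular expression from the advisory above, and the helper names are hypothetical.

```python
import re
import time

# Hypothetical pattern for illustration; NOT the regex from the advisory.
# The nested quantifier (a+)+ lets a backtracking engine split a run of "a"
# characters in exponentially many ways before it finally gives up.
VULNERABLE = re.compile(r"^(a+)+$")

# Matches the same strings, but without the nested quantifier.
SAFE = re.compile(r"^a+$")


def timed_match(pattern, text):
    """Return (match_or_None, seconds_spent)."""
    start = time.perf_counter()
    result = pattern.match(text)
    return result, time.perf_counter() - start


def malicious_input(n):
    """n valid characters followed by one that forces the match to fail."""
    return "a" * n + "!"


if __name__ == "__main__":
    for n in (10, 14, 18):
        _, slow = timed_match(VULNERABLE, malicious_input(n))
        _, fast = timed_match(SAFE, malicious_input(n))
        print(f"n={n}: vulnerable {slow:.4f}s, safe {fast:.6f}s")
```

With a backtracking engine, the time for the vulnerable pattern roughly doubles with each extra character, so a fairly short input is enough to hang a process. The input sizes here are kept small on purpose.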

This seems so dangerous if you accept untrusted user input and lint it, but let's look at our situation. This package is a "dev" dependency, which means it's only used during development or building. By the time our test React Native application is installed on a device, ESLint is gone, and so is the vulnerability.

So how was this issue discovered? Was it abused or what?

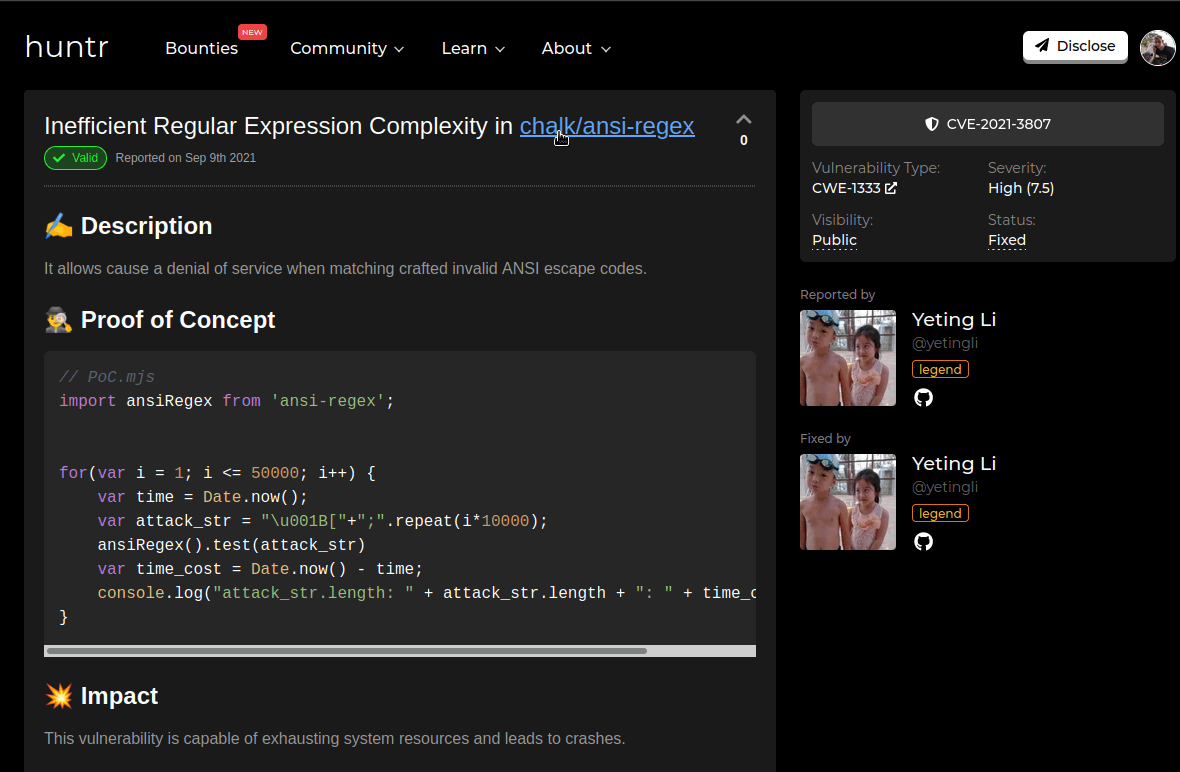

It was discovered by a researcher and then fixed by the same researcher, who built an intentionally malicious expression to test before and after the fix.

I'm all for building a PoC for vulnerabilities so complex systems have an easy way to check whether they are vulnerable, but sadly, let's talk about what I believe actually happened here in the full picture.

This service, Huntr, is a newer website that basically funds finding and fixing vulnerabilities in open source software. It automatically contacts maintainers and, much like other services, attempts to help get issues fixed.

It sounds great and also includes funding to help entice the researcher and maintainer to resolve the issue.

We are a UK based startup backed by VCs such as Seedcamp.

https://huntr.dev/faq/

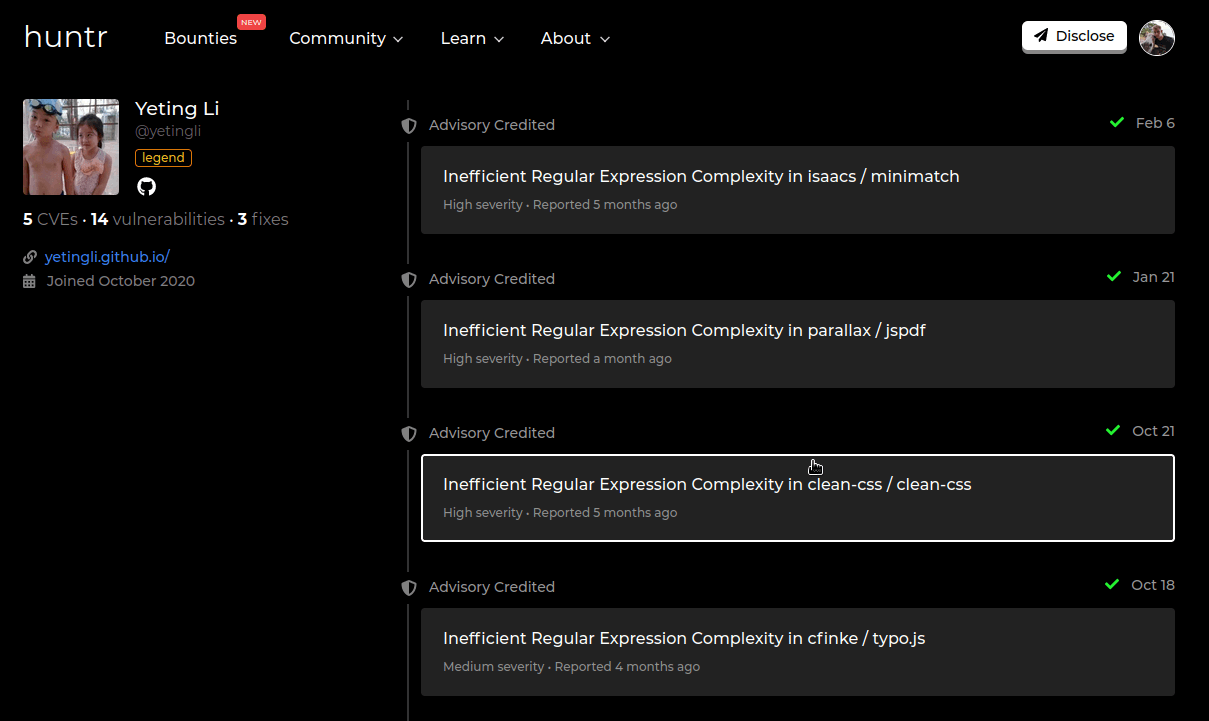

However, it leads to bounty farming, and this is what generally happens:

- A type of vulnerability is discovered (ReDoS)

- That vulnerability has an easy PoC (Proof of Concept)

- That vulnerable snippet is scanned across GitHub to find vulnerable repos.

- Those vulnerable repos are submitted to Huntr.

- Huntr reaches out to the repo privately to disclose and resolve the issue.

- However, the researcher can also be the fixer and claim 2 bounties (report and fix).

In the example above, the user responsible for discovering and fixing this issue has found this same type of issue in plenty of other repositories.

Is this wrong? Well, this is where we get into debatable territory. I will never say that fixing security issues is wrong, but how it's done in this example bothers me.

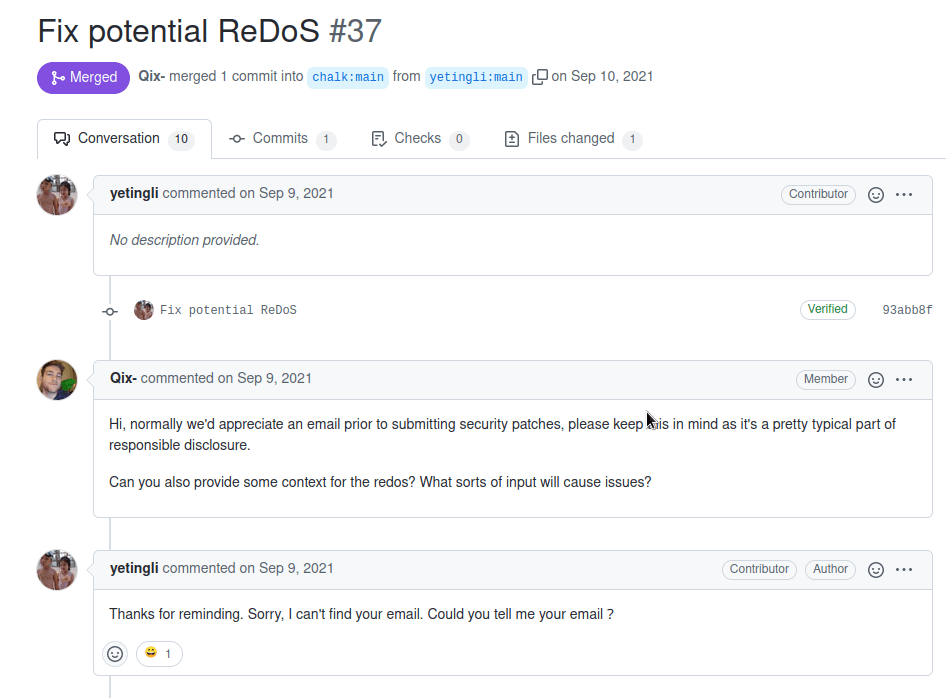

Let's look at some timestamps. On September 9, 2021 the Huntr disclosure was created. We can't tell if any contact occurred, but we know the date it was created. That is also the same day the researcher submitted a public fix to the repo.

This is the wide open public GitHub. Where is the responsible disclosure? Why did Huntr not enforce a secure method of disclosing?

Let's talk about the vulnerability itself, though, and how it's treated. It got a 7.5 HIGH rating, which is quite severe in the scale of things. Telling a non-technical person that they have a known, unpatched HIGH vulnerability is a tough conversation to have.

Now I have to understand that not everyone using this package is using it for the same reason I am, so perhaps this severity is earned given a different situation.

From my perspective, though, the rating is absurd and so is the exploit. Not a single use I make of this package involves user input, and if someone has physical access to my development machine to properly exploit this flow, they won't! They will do far worse things.

Still, whether it's for my client, my hobby project, my work or my boss, this HIGH-rated vulnerability will keep plaguing the repository and show up in reports, exports and compliance checks until patched, even if I don't agree.

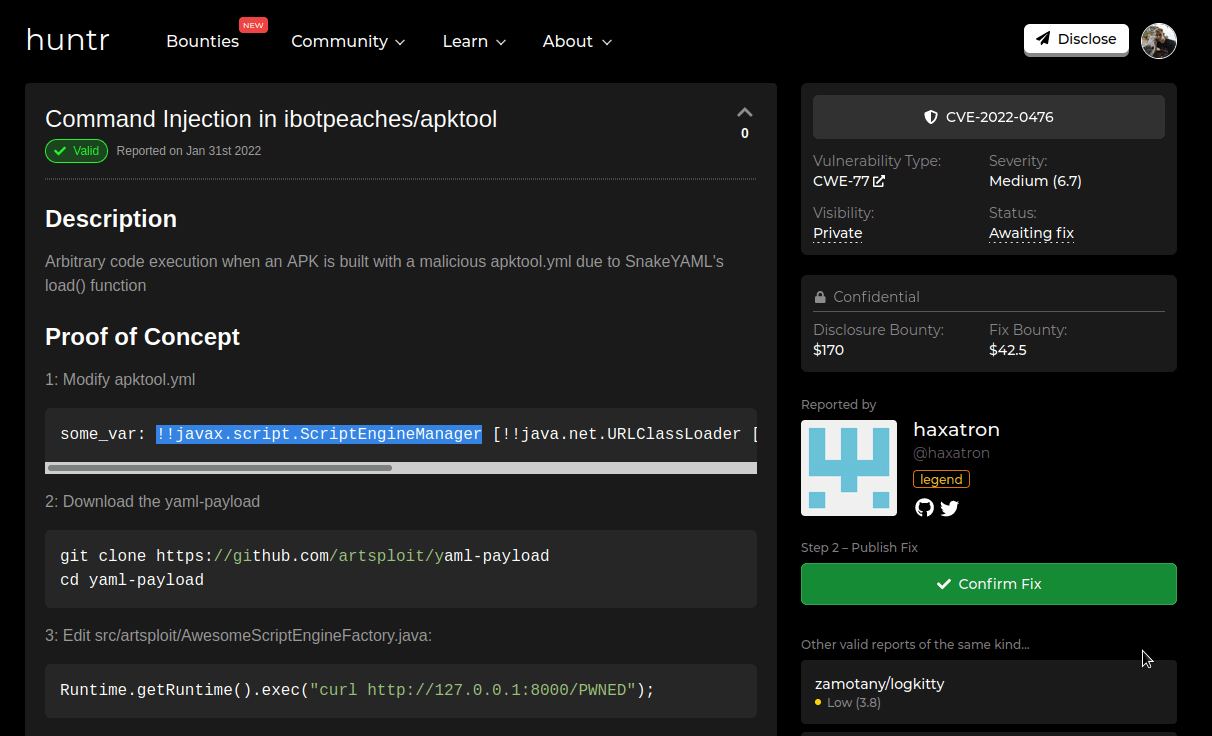

So let's pivot to why I think this occurs, because guess what: Apktool has a currently unreleased, unpatched vulnerability through Huntr as well.

Above is what I see as the maintainer of Apktool. I got a suspicious email from a service I believed to be a scam, and that led me to the screenshot above once I joined.

A researcher (who is in the top 3 of Huntr) had found a vulnerability in Apktool and was rewarded $170 for the effort. Given the explanation I'm about to give, that is way too much, especially since Apktool has only netted $244 profit in 10+ years. So I find it especially odd that someone can make nearly 70% of what I made in a decade for a simple search on GitHub.

Let me explain. This vulnerability is a natural consequence of how SnakeYAML parses user-supplied YAML, which can lead to executing code. It was patched with a simple SafeConstructor, which basically builds out each supported scalar datatype so a malicious YAML file would not be executed.

The researcher suggests that I just fix 1 line of code here and all my problems will go away. The issue is that we already use a custom constructor in Apktool to handle character sets more easily in an attempt to thwart resource obfuscation, so I have to adapt it more carefully to patch this issue.

However, it won't work cleanly, because we actually do resolve to a configuration class, which is now rejected. I'm not well versed in YAML parsing, so now I spend a few hours looking into how to explicitly allow some classes but reject the rest. I quickly realize that the little time I have for Apktool is not worth spending on this.

Let's look back at the reported vulnerability, though. The vector here is that first you must disassemble an application with Apktool using `apktool d`. Once disassembled, Apktool generates an apktool.yml file to store configurations. It writes that file itself from configurations passed in during disassembly, and it looks like this.

    !!brut.androlib.meta.MetaInfo
    apkFileName: Dialer.apk
    compressionType: false
    doNotCompress:
    - classes.dex
    - resources.arsc
    - png
    - webp
    isFrameworkApk: false
    packageInfo:
      forcedPackageId: '127'
      renameManifestPackage: null
    sdkInfo:
      minSdkVersion: '30'
      targetSdkVersion: '29'
    sharedLibrary: false
    sparseResources: false
    unknownFiles:
    usesFramework:
      ids:
      - 1
      tag: null
    version: 2.6.1-ddc4bb-SNAPSHOT
    versionInfo:
      versionCode: '2900000'
      versionName: '23.0'

This helps Apktool know what to do during the build process. Apktool makes it and reads it. So exploiting this vulnerability means modifying the above file to be malicious between those two steps, so that Apktool executes it when it reads the file back.
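The "allow some classes, reject the rest" idea mentioned earlier can be sketched as a toy check in Python. This is only an illustration of the concept: it is NOT SnakeYAML's API and NOT Apktool's actual code, and every name in it (`ALLOWED_TAGS`, `check_tags`, `com.example.Evil`) is hypothetical. The only real value is the `!!brut.androlib.meta.MetaInfo` tag from the sample file above.

```python
import re

# Toy illustration of "allow some classes, reject the rest".
# This is NOT SnakeYAML's API and NOT Apktool's actual code.

# The only type tag that appears in the apktool.yml sample above.
ALLOWED_TAGS = {"!!brut.androlib.meta.MetaInfo"}

# A "!!" followed by a dotted, Java-style class name.
TAG_PATTERN = re.compile(r"!![\w.$]+")


class DisallowedTagError(ValueError):
    """Raised when the document asks for a type outside the allow-list."""


def check_tags(yaml_text, allowed=ALLOWED_TAGS):
    """Return the tags found, or raise on the first one not allow-listed."""
    found = []
    for line_no, line in enumerate(yaml_text.splitlines(), start=1):
        for tag in TAG_PATTERN.findall(line):
            if tag not in allowed:
                raise DisallowedTagError(f"line {line_no}: {tag} is not allowed")
            found.append(tag)
    return found


generated = "!!brut.androlib.meta.MetaInfo\napkFileName: Dialer.apk\n"
# Hypothetical tampered file; com.example.Evil is a made-up class name.
tampered = "!!com.example.Evil\napkFileName: Dialer.apk\n"

print(check_tags(generated))
try:
    check_tags(tampered)
except DisallowedTagError as err:
    print("rejected:", err)
```

Scanning raw text like this is naive (a quoted string containing `!!` would trip it), and a real fix would live inside the parser's constructor. The point is only that the file Apktool writes needs exactly one custom type, so everything else could in principle be refused.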

Let's talk about how that is supposed to happen. If you are disassembling an APK on your own machine, the vector would require read/write access to that machine in order to modify the apktool.yml. These files are NOT shared around; they are built at runtime by the decode operation.

So you can see that abusing this vector is very difficult. If you had file-system access to a victim's computer, why would you wait for them to build a malicious APK? You'd obviously do much more damage if that is the access you had.

So no, I don't agree with the money paid to the researcher, nor with the severity given to it. Even after discussing it with the researcher, I could only drop this vulnerability from 7.0 to 6.7 (MEDIUM), which is still a bounty vulnerability worth $170.

Why can't that money go towards fixing real bugs? Issues that plague the use of the tool and not a wrongly classified security issue that will now hang above Apktool until resolved.

You can tell I'm not happy about this and this is why.

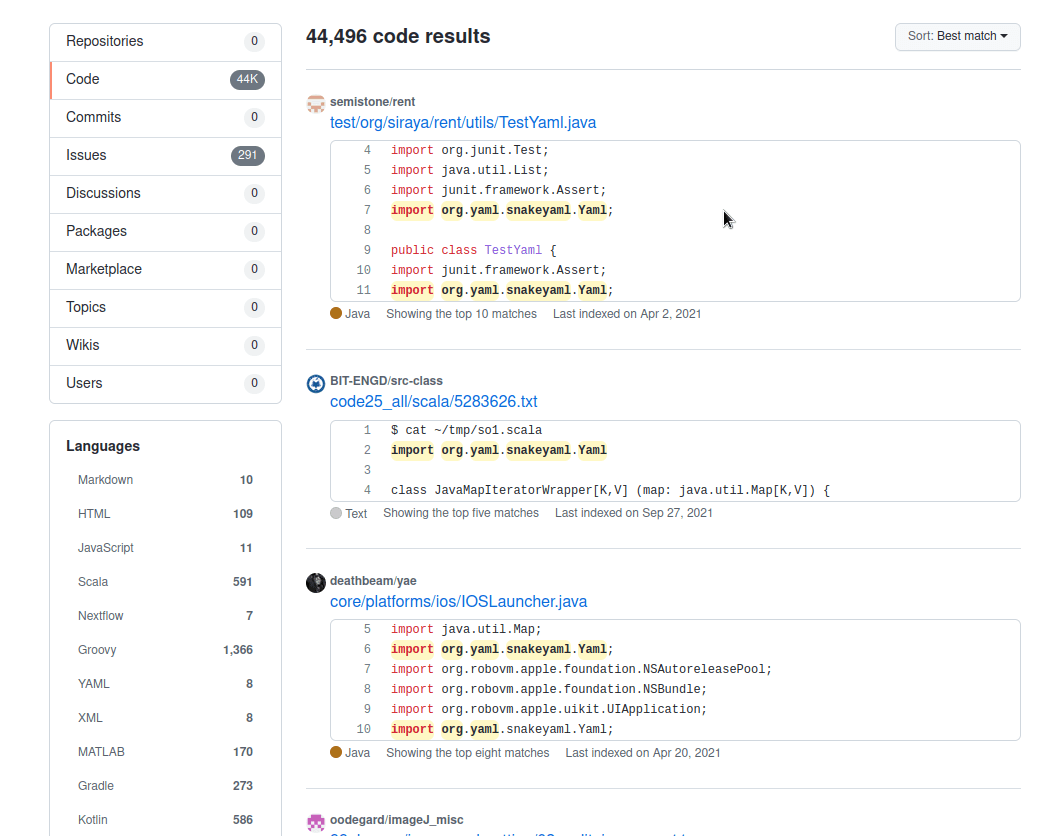

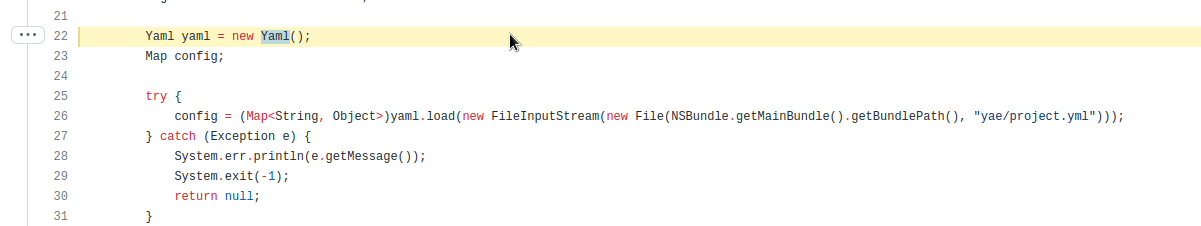

One simple GitHub search and I found 44,496 results. The first one looks to be a test file, so I don't care. The second one is a text file, so I don't care. Let's look at the third.

This appears to be some file responsible for loading configurations, and it looks vulnerable to this same issue.

So do I just go down this list of 44k results and look for vulnerable uses of SnakeYAML? It seems that if I can get $170 for a confirmed issue and can work quickly, I can make some serious money just reporting every single repository I find that does this incorrectly, and could probably average 6-10 an hour.
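The rough math behind that, as a short Python sketch. It uses only figures quoted in this post ($170 per bounty, 6-10 reports an hour, $244 of Apktool profit) and assumes the best case where every report is confirmed and paid the same amount, which is an assumption and not something Huntr promises.

```python
BOUNTY_USD = 170             # bounty quoted earlier in this post
REPORTS_PER_HOUR = (6, 10)   # the range guessed above
APKTOOL_PROFIT_USD = 244     # Apktool's profit in 10+ years, quoted earlier


def hourly_range(bounty, reports_per_hour):
    """Best-case hourly earnings: every report is confirmed and paid."""
    low, high = reports_per_hour
    return bounty * low, bounty * high


low, high = hourly_range(BOUNTY_USD, REPORTS_PER_HOUR)
print(f"${low:,} to ${high:,} per hour")

# One bounty as a share of a decade of Apktool profit.
share = BOUNTY_USD / APKTOOL_PROFIT_USD * 100
print(f"one bounty is {share:.1f}% of the profit")
```

That works out to $1,020 to $1,700 an hour in the best case, and a single bounty is just under 70% of what Apktool has made in a decade.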

I did not join Huntr (as an Apktool maintainer), and this security issue did NOT follow my requested guideline for security issues. So if that is true for me, I guess it doesn't matter if this "YAE" program, in the above screenshot, does either. I should be able to win a bounty by reporting this.

You see what's happening? I'm now pretending to chase bounties for a leaderboard/money and don't actually care about secure software anymore. In this example I just found, who would maliciously modify a configuration file that the program makes itself?

No one. They wouldn't.

If they had the access required to exploit that vulnerability, they would do a world of different things. I don't have to say that though; I can just report a security issue and let the platform (Huntr) determine the severity itself.

So, to try and close out this thought process and blog post: we are getting hit hard with CVEs, and the problem is that they are coming in batches, fast and often, even when the severity is miscalculated and the difficulty of exploiting them even more so.

This will lead to engineers ignoring these vulnerabilities, when the entire purpose of this evolution of platforms was to encourage users to patch them. When a brand new project install says it has over 10 unpatched vulnerabilities, the system is broken.